GDC 2018 Tech Sessions

Using the Model Optimizer to Convert MXNet* Models

Introduction

NOTE: The OpenVINO™ toolkit was formerly known as the Intel® Computer Vision SDK.

The Model Optimizer is a cross-platform command-line tool that facilitates the transition between the training and deployment environment, performs static model analysis, and adjusts deep learning models for optimal execution on end-point target devices.

The Model Optimizer process assumes you have a network model trained using a supported framework. The scheme below illustrates the typical workflow for deploying a trained deep learning model:

A summary of the steps for optimizing and deploying a model that was trained with the MXNet* framework:

- Configure the Model Optimizer for MXNet* (MXNet was used to train your model).

- Convert an MXNet model to produce an optimized Intermediate Representation (IR) of the model based on the trained network topology, weights, and bias values.

- Test the model in the Intermediate Representation format using the Inference Engine in the target environment, using the provided Inference Engine validation application or sample applications.

- Integrate the Inference Engine in your application to deploy the model in the target environment.

Model Optimizer Workflow

The Model Optimizer process assumes you have a network model that was trained with one of the supported frameworks. The workflow is:

- Configure the Model Optimizer for the MXNet* framework by running the configuration bash script (Linux*) or batch file (Windows*) from the <INSTALL_DIR>/deployment_tools/model_optimizer/install_prerequisites folder:

install_prerequisites_mxnet.sh

install_prerequisites_mxnet.bat

For more details on configuring the Model Optimizer, see Configure the Model Optimizer.

- Provide as input a trained model that contains a certain topology, described in the .json file, and the adjusted weights and biases, described in the .params file.

- Convert the MXNet* model to an optimized Intermediate Representation.

The Model Optimizer produces as output an Intermediate Representation (IR) of the network that can be read, loaded, and inferred with the Inference Engine. The Inference Engine API offers a unified API across a number of supported Intel® platforms. The Intermediate Representation is a pair of files that describe the whole model:

- .xml: Describes the network topology

- .bin: Contains the weights and biases binary data
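Because the IR is always a pair of files, a conversion script may want to confirm that both exist before handing the model to the Inference Engine. The following is a minimal sketch; the helper names `ir_file_pair` and `ir_is_complete` are hypothetical and are not part of the toolkit.

```python
from pathlib import Path
from typing import Tuple


def ir_file_pair(output_dir: str, model_name: str) -> Tuple[Path, Path]:
    """Return the expected (.xml, .bin) paths for an IR named `model_name`.

    The suffix is appended rather than substituted, so names that already
    contain a dot (for example "SqueezeNet_v1.1") are kept intact.
    """
    directory = Path(output_dir)
    return directory / f"{model_name}.xml", directory / f"{model_name}.bin"


def ir_is_complete(output_dir: str, model_name: str) -> bool:
    """Check that both halves of the IR pair are present on disk."""
    xml_path, bin_path = ir_file_pair(output_dir, model_name)
    return xml_path.is_file() and bin_path.is_file()
```

For example, `ir_is_complete("../../models", "result")` would look for `result.xml` and `result.bin` in `../../models`.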

Supported Topologies

The table below shows the supported models, with the links to the symbol and parameters.

| Model Name | Model |

|---|---|

| VGG-16 | Symbol, Params |

| VGG-19 | Symbol, Params |

| ResNet-152 v1 | Symbol, Params |

| SqueezeNet_v1.1 | Symbol, Params |

| Inception BN | Symbol, Params |

| CaffeNet | Symbol, Params |

| DenseNet-121 | Repo |

| DenseNet-161 | Repo |

| DenseNet-169 | Symbol, Params |

| DenseNet-201 | Repo |

| MobileNet | Repo, Params |

| SSD-ResNet-50 | Repo, Params |

| SSD-VGG-16-300 | Symbol + Params |

| SSD-Inception v3 | Symbol + Params |

| Fast MRF CNN (Neural Style Transfer) | Repo |

| FCN8 (Semantic Segmentation) | Repo |

Converting an MXNet* Model

To convert an MXNet model:

- Go to the <INSTALL_DIR>/deployment_tools/model_optimizer directory.

- To convert an MXNet* model contained in model-file-symbol.json and model-file-0000.params, run the Model Optimizer launch script mo_mxnet.py, specifying a path to the input model file:

python3 mo_mxnet.py --input_model model-file-0000.params
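The file names in this step follow the usual MXNet checkpoint convention of `<prefix>-symbol.json` and `<prefix>-<4-digit epoch>.params`. As a rough sketch, assuming that convention holds for your model, the symbol file that belongs to a given .params file can be derived as follows. The helper names are hypothetical; only the `mo_mxnet.py --input_model` invocation comes from this document.

```python
import re
from pathlib import Path
from typing import List, Optional


def symbol_path_for(params_path: str) -> Optional[Path]:
    """Derive '<prefix>-symbol.json' from '<prefix>-NNNN.params'.

    Returns None when the file name does not follow the checkpoint
    naming convention (for example a plain 'model.params').
    """
    path = Path(params_path)
    match = re.fullmatch(r"(?P<prefix>.+)-(?P<epoch>\d{4})\.params", path.name)
    if match is None:
        return None
    return path.with_name(f"{match.group('prefix')}-symbol.json")


def conversion_command(params_path: str) -> List[str]:
    """Build the basic conversion command as an argument list."""
    return ["python3", "mo_mxnet.py", "--input_model", params_path]
```

The argument list returned by `conversion_command` can be passed to `subprocess.run` from the model_optimizer directory.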

Two groups of parameters are available to convert your model:

- Framework-agnostic parameters: These parameters are used to convert any model trained in any supported framework.

- MXNet-specific parameters: These parameters are used to convert only MXNet* models.

Using Framework-Agnostic Conversion Parameters

The following list provides the framework-agnostic parameters. The MXNet-specific list is further down in this document.

Optional arguments:

-h, --help Shows this help message and exit

--framework {tf,caffe,mxnet}

Name of the framework used to train the input model.

Framework-agnostic parameters:

--input_model INPUT_MODEL, -w INPUT_MODEL, -m INPUT_MODEL

Tensorflow*: a file with a pre-trained model (binary

or text .pb file after freezing). Caffe*: a model

proto file with model weights

--model_name MODEL_NAME, -n MODEL_NAME

Model_name parameter passed to the final create_ir

transform. This parameter is used to name a network in

a generated IR and output .xml/.bin files.

--output_dir OUTPUT_DIR, -o OUTPUT_DIR

Directory that stores the generated IR. By default, it

is the directory from where the Model Optimizer is

launched.

--input_shape INPUT_SHAPE

Input shape that should be fed to an input node of the

model. Shape is defined in the '[N,C,H,W]' or

'[N,H,W,C]' format, where the order of dimensions

depends on the framework input layout of the model.

For example, [N,C,H,W] is used for Caffe* models and

[N,H,W,C] for TensorFlow* models. Model Optimizer

performs necessary transforms to convert the shape to

the layout acceptable by Inference Engine. Two types

of brackets are allowed to enclose the dimensions:

[...] or (...). The shape should not contain undefined

dimensions (? or -1) and should fit the dimensions

defined in the input operation of the graph.

--scale SCALE, -s SCALE

All input values coming from original network inputs

will be divided by this value. When a list of inputs

is overridden by the --input parameter, this scale is

not applied for any input that does not match with the

original input of the model.

--reverse_input_channels

Switches the input channels order from RGB to BGR.

Applied to original inputs of the model when and only

when a number of channels equals 3

--log_level {CRITICAL,ERROR,WARN,WARNING,INFO,DEBUG,NOTSET}

Logger level

--input INPUT The name of the input operation of the given model.

Usually this is a name of the input placeholder of the

model.

--output OUTPUT The name of the output operation of the model. For

TensorFlow*, do not add :0 to this name.

--mean_values MEAN_VALUES, -ms MEAN_VALUES

Mean values to be used for the input image per

channel. Shape is defined in the '(R,G,B)' or

'[R,G,B]' format. The shape should not contain

undefined dimensions (? or -1). The order of the

values is the following: (value for a RED channel,

value for a GREEN channel, value for a BLUE channel)

--scale_values SCALE_VALUES

Scale values to be used for the input image per

channel. Shape is defined in the '(R,G,B)' or

'[R,G,B]' format. The shape should not contain

undefined dimensions (? or -1). The order of the

values is the following: (value for a RED channel,

value for a GREEN channel, value for a BLUE channel)

--data_type {FP16,FP32,half,float}

Data type for input tensor values.

--disable_fusing Turns off fusing of linear operations to Convolution

--disable_gfusing Turns off fusing of grouped convolutions

--extensions EXTENSIONS

Directory or list of directories with extensions. To

disable all extensions including those that are placed

at the default location, pass an empty string.

--batch BATCH, -b BATCH

Input batch size

--version Version of Model Optimizer

Note: The Model Optimizer does not reverse input channels from RGB to BGR by default, as it did in the 2017 R3 Beta release. Instead, manually specify the command-line parameter to perform the reversal: --reverse_input_channels
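To make the effect of --mean_values, --scale_values, and --reverse_input_channels more concrete, here is a small pure-Python sketch of the arithmetic for a single pixel. It assumes the mean is subtracted before the division by the scale, and that channel reversal is applied last; that ordering is an assumption for illustration and is not stated in the help text above. The function name `preprocess_pixel` is hypothetical.

```python
from typing import List, Sequence


def preprocess_pixel(
    pixel: Sequence[float],
    mean_values: Sequence[float] = (0, 0, 0),
    scale_values: Sequence[float] = (1, 1, 1),
    reverse_input_channels: bool = False,
) -> List[float]:
    """Apply per-channel (value - mean) / scale, then optionally swap RGB to BGR."""
    normalized = [
        (value - mean) / scale
        for value, mean, scale in zip(pixel, mean_values, scale_values)
    ]
    # As in the help text, reversal applies only when there are exactly 3 channels.
    if reverse_input_channels and len(normalized) == 3:
        normalized.reverse()
    return normalized
```

For instance, `preprocess_pixel((118, 59, 0), scale_values=(59, 59, 59))` yields `[2.0, 1.0, 0.0]`.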

Command-Line Interface (CLI) Examples Using Framework-Agnostic Parameters

- Launching the Model Optimizer for model.params with debug log level. Use this to better understand what is happening internally when a model is converted:

python3 mo_mxnet.py --input_model model.params --log_level DEBUG

- Launching the Model Optimizer for model.params with the output Intermediate Representation called result.xml and result.bin, placed in the specified ../../models/ directory:

python3 mo_mxnet.py --input_model model.params --model_name result --output_dir ../../models/

- Launching the Model Optimizer for model.params and providing scale values for a single input:

python3 mo_mxnet.py --input_model model.params --scale_values [59,59,59]

- Launching the Model Optimizer for model.params with two inputs and one set of scale values for each input. The number of sets of scale/mean values must be exactly the same as the number of inputs of the given model:

python3 mo_mxnet.py --input_model model.params --input data,rois --scale_values [59,59,59],[5,5,5]

- Launching the Model Optimizer for model.params with a specified input layer (data), changing the shape of the input layer to [1,3,224,224], and specifying the name of the output layer:

python3 mo_mxnet.py --input_model model.params --input data --input_shape [1,3,224,224] --output pool5

- Launching the Model Optimizer for model.params with disabled fusing for linear operations with convolution, set by the --disable_fusing flag, and for grouped convolutions, set by the --disable_gfusing flag:

python3 mo_mxnet.py --input_model model.params --disable_fusing --disable_gfusing

- Launching the Model Optimizer for model.params, reversing the channel order between RGB and BGR, specifying mean values for the input, and setting the precision of the Intermediate Representation to FP16:

python3 mo_mxnet.py --input_model model.params --reverse_input_channels --mean_values [255,255,255] --data_type FP16

- Launching the Model Optimizer for model.params with extensions from specified directories, in particular from /home/ and from /some/other/path/. In addition, the following command shows how to pass the mean file to the Intermediate Representation. The mean file must be in binaryproto format:

python3 mo_mxnet.py --input_model model.params --extensions /home/,/some/other/path/ --mean_file mean_file.binaryproto
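The multi-input example above requires one set of scale values per input. A small helper can enforce that rule before the command is launched. This is only a sketch: the function names are hypothetical, and only the --input and --scale_values options and their bracketed format are taken from this document.

```python
from typing import List, Sequence


def format_value_sets(value_sets: Sequence[Sequence[float]]) -> str:
    """Render [[59, 59, 59], [5, 5, 5]] as '[59,59,59],[5,5,5]'."""
    return ",".join(
        "[" + ",".join(str(value) for value in values) + "]"
        for values in value_sets
    )


def multi_input_args(
    inputs: Sequence[str], scale_value_sets: Sequence[Sequence[float]]
) -> List[str]:
    """Build --input/--scale_values arguments, one value set per input."""
    if len(inputs) != len(scale_value_sets):
        raise ValueError(
            f"{len(inputs)} inputs but {len(scale_value_sets)} sets of scale values"
        )
    return [
        "--input", ",".join(inputs),
        "--scale_values", format_value_sets(scale_value_sets),
    ]
```

Passing the resulting list to `subprocess.run` without a shell also avoids any shell interpretation of the square brackets.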

Using MXNet*-Specific Conversion Parameters

The following list provides the MXNet*-specific parameters.

Mxnet-specific parameters:

--nd_prefix_name ND_PREFIX_NAME

Prefix name for args.nd and argx.nd files.

--pretrained_model_name PRETRAINED_MODEL_NAME

Name of pretrained model without extension and epoch

number which will be merged with args.nd and argx.nd

files.

Custom Layer Definition

Internally, when you run the Model Optimizer, it loads the model, goes through the topology, and tries to find each layer type in a list of known layers. Custom layers are layers that are not included in the list of known layers. If your topology contains any layers that are not in this list of known layers, the Model Optimizer classifies them as custom.

Supported Layers and the Mapping to Intermediate Representation Layers

| Number | Layer Name in MXNet | Layer Name in the Intermediate Representation |

|---|---|---|

| 1 | BatchNorm | BatchNormalization |

| 2 | Crop | Crop |

| 3 | ScaleShift | ScaleShift |

| 4 | Pooling | Pooling |

| 5 | SoftmaxOutput | SoftMax |

| 6 | SoftmaxActivation | SoftMax |

| 7 | null | Ignored, does not appear in IR |

| 8 | Convolution | Convolution |

| 9 | Deconvolution | Deconvolution |

| 10 | Activation | ReLU |

| 11 | ReLU | ReLU |

| 12 | LeakyReLU | ReLU (negative_slope = 0.25) |

| 13 | Concat | Concat |

| 14 | elemwise_add | Eltwise(operation = sum) |

| 15 | _Plus | Eltwise(operation = sum) |

| 16 | Flatten | Flatten |

| 17 | Reshape | Reshape |

| 18 | FullyConnected | FullyConnected |

| 19 | UpSampling | Resample |

| 20 | transpose | Permute |

| 21 | LRN | Norm |

| 22 | L2Normalization | Normalize |

| 23 | Dropout | Ignored, does not appear in IR |

| 24 | _copy | Ignored, does not appear in IR |

| 25 | _contrib_MultiBoxPrior | PriorBox |

| 26 | _contrib_MultiBoxDetection | DetectionOutput |

| 27 | broadcast_mul | ScaleShift |

The current version of the Model Optimizer for MXNet does not support models that contain custom layers. The general recommendation is to cut the model to remove the custom layer, and then convert the cut model without the custom layer. If the custom layer is the last layer in the topology, its processing logic can be implemented in the Inference Engine sample that you use when inferring the model.
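Before running a conversion, it can be useful to check a model for layers that the Model Optimizer would classify as custom. The sketch below scans the text of an MXNet symbol .json file, which stores the graph as a "nodes" list whose entries carry "op" and "name" fields, and reports any operator that is not in the table above. This illustrates the idea only and is not how the Model Optimizer itself performs the check; `find_custom_layers` is a hypothetical helper.

```python
import json
from typing import Dict, List

# Operator names copied from the supported-layers table in this section.
SUPPORTED_OPS = {
    "BatchNorm", "Crop", "ScaleShift", "Pooling", "SoftmaxOutput",
    "SoftmaxActivation", "null", "Convolution", "Deconvolution",
    "Activation", "ReLU", "LeakyReLU", "Concat", "elemwise_add", "_Plus",
    "Flatten", "Reshape", "FullyConnected", "UpSampling", "transpose",
    "LRN", "L2Normalization", "Dropout", "_copy",
    "_contrib_MultiBoxPrior", "_contrib_MultiBoxDetection", "broadcast_mul",
}


def find_custom_layers(symbol_json: str) -> Dict[str, List[str]]:
    """Map each unsupported operator type to the names of nodes that use it."""
    graph = json.loads(symbol_json)
    custom: Dict[str, List[str]] = {}
    for node in graph.get("nodes", []):
        op = node.get("op")
        if op not in SUPPORTED_OPS:
            custom.setdefault(op, []).append(node.get("name", "<unnamed>"))
    return custom
```

A typical call would be `find_custom_layers(open("model-file-symbol.json").read())`; an empty result means every operator appears in the table.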

Frequently Asked Questions (FAQ)

The Model Optimizer provides explanatory messages if it is unable to run to completion due to issues like typographical errors, incorrectly used options, or other issues. The message describes the potential cause of the problem and gives a link to the Model Optimizer FAQ. The FAQ has instructions on how to resolve most issues. The FAQ also includes links to relevant sections in the Model Optimizer Developer Guide to help you understand what went wrong. The FAQ is here: https://software.intel.com/en-us/articles/OpenVINO-ModelOptimizer#FAQ.

Summary

In this document, you learned:

- Basic information about how the Model Optimizer works with MXNet* models

- Which MXNet* models are supported

- How to convert a trained MXNet* model using the Model Optimizer with both framework-agnostic and MXNet-specific command-line options

Legal Information

You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein.

No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document.

All information provided here is subject to change without notice. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps.

The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request.

Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at http://www.intel.com/ or from the OEM or retailer.

No computer system can be absolutely secure.

Intel, Arria, Core, Movidius, Pentium, Xeon, and the Intel logo are trademarks of Intel Corporation in the U.S. and/or other countries.

OpenCL and the OpenCL logo are trademarks of Apple Inc. used with permission by Khronos.

*Other names and brands may be claimed as the property of others.

Copyright © 2018, Intel Corporation. All rights reserved.

IoT Reference Implementation: How to Build a Store Traffic Monitor

An application capable of detecting objects on any number of screens.

What it Does

This application is one of a series of IoT reference implementations aimed at instructing users on how to develop a working solution for a particular problem. It demonstrates how to create a smart video IoT solution using Intel® hardware and software tools. This reference implementation monitors people's activity inside and outside a facility and counts product inventory.

How it Works

The counter uses the Inference Engine included in OpenVINO™. A trained neural network detects objects within a designated area by displaying a green bounding box over them. This reference implementation identifies multiple intruding objects entering the frame and identifies their class, count, and time entered.

Requirements

Hardware

- 6th Generation Intel® Core™ processor with Intel® Iris® Pro graphics and Intel® HD Graphics.

Software

- Ubuntu* 16.04 LTS

Note: You must be running kernel version 4.7+ to use this software. We recommend a 4.14+ kernel. Run the following command to determine your kernel version:

uname -a
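The same kernel check can be scripted. The sketch below parses the kernel release string and compares it against the 4.7 minimum and the 4.14 recommendation stated above. Comparing (major, minor) tuples rather than strings matters here, because "4.10" sorts before "4.7" as text. The function names and the three status labels are hypothetical.

```python
import platform
import re
from typing import Tuple

MINIMUM_KERNEL = (4, 7)       # required, per the note above
RECOMMENDED_KERNEL = (4, 14)  # recommended, per the note above


def parse_kernel_release(release: str) -> Tuple[int, int]:
    """Extract (major, minor) from a release string such as '4.15.0-112-generic'."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    return int(match.group(1)), int(match.group(2))


def kernel_status(release: str) -> str:
    """Classify a kernel release as 'unsupported', 'supported', or 'recommended'."""
    version = parse_kernel_release(release)
    if version < MINIMUM_KERNEL:
        return "unsupported"
    if version < RECOMMENDED_KERNEL:
        return "supported"
    return "recommended"


def current_kernel_status() -> str:
    """Classify the kernel of the machine this script runs on."""
    return kernel_status(platform.release())
```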

- OpenCL™ Runtime Package

- OpenVINO™

IoT Reference Implementation: How to Build an Intruder Detector Solution

An application capable of detecting any number of objects from a video input.

What it Does

This application is one of a series of IoT reference implementations aimed at instructing users on how to develop a working solution for a particular problem. It demonstrates how to create a smart video IoT solution using Intel® hardware and software tools. This solution detects any number of objects in a designated area, providing the number of objects in the frame and the total count.

How it Works

The counter uses the Inference Engine included in OpenVINO™. A trained neural network detects objects within a designated area by displaying a green bounding box over them, and registers them in a logging system.

Requirements

Hardware

- 6th Generation Intel® Core™ processor with Intel® Iris® Pro graphics and Intel® HD Graphics

Software

- Ubuntu* 16.04 LTS

Note: You must be running kernel version 4.7+ to use this software. We recommend a 4.14+ kernel. Run the following command to determine your kernel version:

uname -a

- OpenCL™ Runtime Package

- OpenVINO™

IoT Reference Implementation: How to Build a Face Access Control Solution

Introduction

The Face Access Control application is one of a series of IoT reference implementations aimed at instructing users on how to develop a working solution for a particular problem. The solution uses facial recognition as the basis of a control system for granting physical access. The application detects and registers the image of a person’s face into a database, recognizes known users entering a designated area and grants access if a person’s face matches an image in the database.

From this reference implementation, developers will learn to build and run an application that:

- Detects and registers the image of a person’s face into a database

- Recognizes known users entering a designated area

- Grants access if a person’s face matches an image in the database

How it Works

The Face Access Control system consists of two main subsystems:

cvservice

- cvservice is a C++ application that uses OpenVINO™. It connects to a USB camera (for detecting faces) and then performs facial recognition based on a training data file of authorized users to determine if a detected person is a known user or previously unknown. Messages are published to a MQTT* broker when users are recognized and the processed output frames are written to stdout in raw format (to be piped to ffmpeg for compression and streaming). Here, Intel's Photography Vision Library is used for facial detection and recognition.

webservice

- webservice uses the MQTT broker to interact with cvservice. It's an application based on Node.js* for providing visual feedback at the user access station. Users are greeted when recognized as authorized users or given the option to register as a new user. It displays a high-quality, low-latency motion jpeg stream along with the user interface and data analytics.

In the UI, there are three tabs:

- live streaming video

- user registration

- analytics of access history.

This is what the live streaming video tab looks like:

This is what the user registration tab looks like:

This is an example of the analytics tab:

Hardware requirements

- 5th Generation Intel® Core™ processor or newer or Intel® Xeon® v4, or Intel® Xeon® v5 Processors with Intel® Graphics Technology (if enabled by OEM in BIOS and motherboard) [tested on NUC6i7KYK]

- USB Webcam [tested with Logitech* C922x Pro Stream]

Software requirements

- Ubuntu* 16.04

- OpenVINO™ toolkit

This article continues here on GitHub.

IoT Reference Implementation: People Counter

What it Does

This people counter application is one of a series of IoT reference implementations aimed at instructing users on how to develop a working solution for a particular problem. It demonstrates how to create a smart video IoT solution using Intel® hardware and software tools. This people counter solution detects people in a designated area, providing the number of people in the frame, the average duration of people in the frame, and the total count.

How it Works

The counter uses the Inference Engine included in the OpenVINO™ toolkit and the Intel® Deep Learning Deployment Toolkit. A trained neural network detects people within a designated area by displaying a green bounding box over them. It counts the number of people in the current frame, the duration that a person is in the frame (time elapsed between entering and exiting a frame), and the total number of people seen, and then sends the data to a local web server using the Paho* MQTT C client libraries.
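The three statistics described here can be sketched with a small bookkeeping class. This is an illustration only, not the reference implementation's logic: it assumes some upstream detection and tracking step already reports when a person (identified by a hypothetical integer ID) enters or leaves the frame, and it leaves out the MQTT publishing step.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class PeopleCounter:
    """Track people in frame, their durations, and the total count seen."""

    entered_at: Dict[int, float] = field(default_factory=dict)
    durations: List[float] = field(default_factory=list)
    total_seen: int = 0

    def person_entered(self, person_id: int, timestamp: float) -> None:
        """Record the time at which a person enters the frame."""
        self.entered_at[person_id] = timestamp
        self.total_seen += 1

    def person_exited(self, person_id: int, timestamp: float) -> None:
        """Record a completed visit: time elapsed between entering and exiting."""
        start = self.entered_at.pop(person_id)
        self.durations.append(timestamp - start)

    @property
    def in_frame(self) -> int:
        """Number of people currently in the frame."""
        return len(self.entered_at)

    @property
    def average_duration(self) -> float:
        """Mean duration of completed visits, or 0.0 if there are none yet."""
        if not self.durations:
            return 0.0
        return sum(self.durations) / len(self.durations)
```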

Requirements

Hardware

- 6th Generation Intel® Core™ processor with Intel® Iris® Pro graphics and Intel® HD Graphics.

Software

- Ubuntu* 16.04 LTS

Note: You must be running kernel version 4.7+ to use this software. We recommend a 4.14+ kernel. Run the following command to determine your kernel version:

uname -a

- OpenCL™ Runtime Package

- OpenVINO™

Visit Project on GitHub

How to Install and Use Intel® VTune™ Amplifier Platform Profiler

Try Platform Profiler Today

You are invited to try a free technical preview release. Just follow these simple steps (if you are already registered, skip to step 2):

- Register for the Intel® Parallel Studio XE Beta

- Download and install (the Platform Profiler is a separate download from the Intel Parallel Studio XE Beta)

- Check out the getting started guide, then give Platform Profiler a test drive

- Fill out the online survey

Introduction

Intel® VTune™ Amplifier - Platform Profiler, currently available as a technology preview, is a tool that helps users to identify how well an application uses the underlying architecture and how users can optimize hardware configuration of their system. It displays high-level system configuration such as processor, memory, storage layout, PCIe* and network interfaces (see Figure 1), as well as performance metrics observed on the system such as CPU and memory utilization, CPU frequency, cycles per instruction (CPI), memory and disk input/output (I/O) throughput, power consumption, cache miss rate per instruction, and so on. Performance metrics collected by the tool can be used for deeper analysis and optimization.

There are two primary audiences for Platform Profiler:

- Software developers - Using performance metrics provided by the tool, developers can analyze the behavior of their workload across various platform components such as CPU, memory, disk, and network devices.

- Infrastructure architects - You can monitor your hardware by analyzing long collection runs and finding times when the system exhibits low performance. Moreover, you can optimize the hardware configuration of your system based on the tool findings. For example, if after running a mix of workloads, Platform Profiler shows high processor utilization, memory use, or that I/O is limiting the application performance, you can bring in more cores, more memory, or use more or faster I/O devices.

Figure 1. High-level system configuration view from Platform Profiler

The main difference between Platform Profiler and other VTune Amplifier analyses is that Platform Profiler can profile a platform for long periods of time while incurring very little performance overhead and generating a small amount of data. The current version of Platform Profiler can run for up to 13 hours and generates less data than VTune Amplifier would in the same period. You can simply start Platform Profiler, keep it running for 13 hours while using the system as you prefer, and then stop the collection. Platform Profiler then processes the profiled data and displays the system utilization diagrams. VTune Amplifier, on the other hand, cannot run for such a long period of time, since it generates gigabytes of profiled data in a matter of minutes. Thus, VTune Amplifier is more appropriate for fine-tuning or analyzing an application rather than a system. "How well is my application using the machine?" and "How well is the machine being used?" are the key questions that Platform Profiler can answer. "How do I fix my application to use the machine better?" is the question that VTune Amplifier answers.

Platform Profiler is composed of two main components: a data collector and a server.

- Data Collector - a standalone package that needs to be installed on the profiled system. It collects system-level hardware and operating system performance counters.

- Platform Profiler Server - post-processes the collected data into a time-series database, correlates with system topology information, and displays topology diagrams and performance graphs using a web-based interface.

Tool Installation

In order to use Platform Profiler, one needs to install both the server and the data collector components first. Below are the steps on how to do the installation.

Installing the Server Component

- Copy the server package to the system on which you want to install the server.

- Extract the archive to a writeable directory.

- Run the setup script and follow the prompts. On Windows*, run the script using the Administrator Command Prompt. On Linux*, use an account with root (“sudo”) privileges.

Linux example: ./setup.sh

Windows example: setup.bat

By default, the server is installed in the following location:

- On Linux: /opt/intel/vpp

- On Windows: C:\Program Files (x86)\IntelSWTools\VTune Amplifier Platform Profiler

Installing the Data Collector Component

- Copy the collector package to the target system on which you want to collect platform performance data.

- Extract the archive to a writeable directory on the target system.

- Run the setup script and follow the prompts. On Windows, run the script using the Administrator Command Prompt. On Linux, use an account with root (“sudo”) privileges.

Linux example: ./setup

Windows example: setup.cmd

By default, the collectors are installed in the following location:

- On Linux: /opt/intel/vpp-collector

- On Windows: C:\Intel\vpp-collector

Tool Usage

Starting and Stopping the Server Component

On Linux:

- Run the following commands to start the server manually after initial installation or a system reboot:

- source ./vpp-server-vars.sh

- vpp-server-start

- Run the following commands to stop the server:

- source ./vpp-server-vars.sh

- vpp-server-stop

On Windows:

- Run the following commands to start the server manually after initial installation or a system reboot:

- vpp-server-vars.cmd

- vpp-server-start

- Run the following commands to stop the server:

- vpp-server-vars.cmd

- vpp-server-stop

Collecting System Data

Collecting data using Platform Profiler is straightforward. Below are the steps to collect data using the tool:

- Set up the environment:

- On Linux: source /opt/intel/vpp-collector/vpp-collect-vars.sh

- On Windows: C:\Intel\vpp-collector\vpp-collect-vars.cmd

- Start the data collection: vpp-collect-start [-c "workload description - free text comment"].

- Optionally, you can also add timeline markers to distinguish the time periods between collections: vpp-collect-mark ["an optional label/text/comment"].

- Stop the data collection: vpp-collect-stop. After the collection is stopped, the compressed result file is stored in the current directory.

Note: Inserting timeline markers is useful when you leave Platform Profiler running for a long period of time. For example, you run the Platform Profiler collection for 13 hours straight. During these 13 hours you run various stress tests and would like to find out how each test affects the system. In order to distinguish the time between these tests, you may want to use the timeline markers.
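For long runs with several tests, the start, mark, and stop steps can be wrapped so that the collection is always stopped, even if a test fails. The following is a sketch under stated assumptions: it uses only the vpp-collect-start, vpp-collect-mark, and vpp-collect-stop commands listed above, it assumes the collector environment script has already been sourced so those commands are on the PATH, and the wrapper name `platform_profiler_collection` and its `runner` parameter are hypothetical.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, List, Optional

Runner = Callable[[List[str]], None]


def _run(command: List[str]) -> None:
    """Default runner: execute the command and fail loudly on a non-zero exit."""
    subprocess.run(command, check=True)


@contextmanager
def platform_profiler_collection(
    comment: Optional[str] = None, runner: Runner = _run
) -> Iterator[Callable[[Optional[str]], None]]:
    """Start a collection, yield a function that adds markers, and always stop."""
    start = ["vpp-collect-start"]
    if comment:
        start += ["-c", comment]
    runner(start)

    def mark(label: Optional[str] = None) -> None:
        # Insert a timeline marker, with an optional label.
        runner(["vpp-collect-mark"] + ([label] if label else []))

    try:
        yield mark
    finally:
        runner(["vpp-collect-stop"])
```

A run could then look like `with platform_profiler_collection("stress tests") as mark:` followed by the first test, `mark("test 1 done")`, the second test, and so on. Passing a different `runner` makes it possible to log or dry-run the commands instead of executing them.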

View Results

- From the machine on which the server is installed, point your browser (Google Chrome* recommended) to the server home page: http://localhost:6543.

- Click “View Results”.

- Click the Upload button and select the result file to upload.

- Select the result from the list to open the viewer.

- Navigate through the result to identify areas for optimization.

Tool Demonstration

In the rest of the article, I demonstrate how to navigate and analyze the result data that Platform Profiler collects. I use a movie recommendation system application as an example in this article. The movie recommendation code is obtained from the Spark* Training GitHub* website. The underlying platform is a two-socket Haswell server (Intel® Xeon® CPU E5-2699 v3) with Intel® Hyper-Threading Technology enabled, 72 logical cores, 64 GB of memory, running an Ubuntu* 14.04 operating system.

The code is run in Spark on a single node as follows:

spark-submit --driver-memory 2g --class MovieLensALS --master local[4] movielens-als_2.10-0.1.jar movies movies/test.dat

With the command line above, Spark runs in local mode with four threads, specified with the --master local[4] option. In local mode there is only one driver, which acts as an executor, and the executor spawns the threads to execute tasks. Two arguments can be changed before launching the application: the driver memory (--driver-memory 2g) and the number of threads to run with (local[4]). My goal is to see how much I stress my system by changing these arguments, and to find out whether I can identify any interesting patterns during the execution using Platform Profiler's profiled data.

Here are the four test cases that were run and their corresponding run times:

spark-submit --driver-memory 2g --class MovieLensALS --master local[4] movielens-als_2.10-0.1.jar movies movies/test.dat (16 minutes 11 seconds)

spark-submit --driver-memory 2g --class MovieLensALS --master local[36] movielens-als_2.10-0.1.jar movies movies/test.dat (11 minutes 35 seconds)

spark-submit --driver-memory 8g --class MovieLensALS --master local[36] movielens-als_2.10-0.1.jar movies movies/test.dat (7 minutes 40 seconds)

spark-submit --driver-memory 16g --class MovieLensALS --master local[36] movielens-als_2.10-0.1.jar movies movies/test.dat (8 minutes 14 seconds)
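To compare the four runs at a glance, the run times above can be converted to seconds and expressed as speedups over Test 1. The short sketch below does only that arithmetic; the test labels are shorthand introduced here for readability.

```python
from typing import Dict


def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a 'minutes, seconds' run time to seconds."""
    return minutes * 60 + seconds


# Run times reported for the four test cases above.
RUN_TIMES: Dict[str, int] = {
    "Test 1 (2g, local[4])": to_seconds(16, 11),
    "Test 2 (2g, local[36])": to_seconds(11, 35),
    "Test 3 (8g, local[36])": to_seconds(7, 40),
    "Test 4 (16g, local[36])": to_seconds(8, 14),
}


def speedups(run_times: Dict[str, int], baseline: str) -> Dict[str, float]:
    """Speedup of each run relative to the baseline run (baseline time / run time)."""
    base = run_times[baseline]
    return {name: base / seconds for name, seconds in run_times.items()}


if __name__ == "__main__":
    for name, factor in speedups(RUN_TIMES, "Test 1 (2g, local[4])").items():
        print(f"{name}: {factor:.2f}x")
```

Relative to Test 1, the speedups come out to roughly 1.40x, 2.11x, and 1.97x for Tests 2, 3, and 4. Going from 8g to 16g of driver memory did not shorten the run.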

Figures 2 and 3 show observed CPU metrics during the first and second tests, respectively. Figure 2 shows that the CPU is underutilized and the user can add more work, if the rest of the system is similarly underutilized. The CPU frequency slows down often, supporting the thesis that the CPU will not be the limiter of performance. Figure 3 shows that the test utilizes the CPU more due to an increase in number of threads, but there is still significant headroom. Moreover, it is interesting to see that by increasing the number of threads we also decreased the CPI rate, as shown in the CPI chart of Figure 3.

Figure 2. Overview of CPU usage in Test 1.

Figure 3. Overview of CPU usage in Test 2.

Figure 4. Memory read/write throughput on Socket 0 for Test 1.

The increase in number of threads also increased the number of memory accesses, when you compare Figures 4 and 5. This is an expected behavior and is verified by the data collected by Platform Profiler.

Figure 5. Memory read/write throughput on Socket 0 for Test 2.

Figures 6 and 7 show L1 and L2 miss rates per instruction for Tests 1 and 2, respectively. Increasing the number of threads in Test 2 drastically decreased the L1 and L2 miss rates, as depicted in Figure 7. The application shows a lower CPI and lower L1 and L2 miss rates when the code runs with more threads, which means that once data is loaded from memory into the caches, a fairly good amount of data reuse happens, which benefits the overall performance.
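For readers less familiar with these metrics, both are simple ratios over the number of retired instructions. The sketch below shows the definitions with made-up counter values; it is not how Platform Profiler computes its charts, and the numbers in the usage example are hypothetical, not measurements from these tests.

```python
def cycles_per_instruction(cycles: int, instructions: int) -> float:
    """CPI: average number of CPU cycles spent per retired instruction."""
    return cycles / instructions


def misses_per_instruction(misses: int, instructions: int) -> float:
    """Cache miss rate per instruction: misses divided by retired instructions."""
    return misses / instructions
```

With hypothetical counts of 3,000,000 cycles, 50,000 L1 misses, and 2,000,000 instructions, the CPI is 1.5 and the L1 miss rate is 0.025 misses per instruction. Lower values of either ratio mean the core spends less time stalled per unit of work.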

Figure 6. L1 and L2 miss rate per instruction for Test 1.

Figure 7. L1 and L2 miss rate per instruction for Test 2.

Figure 8 shows the memory usage chart for Test 3. Similar memory usage patterns are observed for all other tests as well; that is, used memory is between 15-25 percent, whereas cached memory is between 45-60 percent. Spark caches its intermediate results in memory for later processing, hence we see high utilization of cached memory.

Figure 8. Memory utilization overview for Test 3.

Finally, Figures 9-12 show the disk utilization overview for all four test runs. As the amount of work increases across the four test runs, the data suggests that a faster disk would improve the performance of the tests. The number of bytes transferred is small, but the I/O operations spend significant time waiting for completion, as the Queue Depth chart shows. If the user is unable to change the disk, adding more threads would help to tolerate the disk access latency.

Figure 9. Disk utilization overview for Test 1.

Figure 10. Disk utilization overview for Test 2.

Figure 11. Disk utilization overview for Test 3.

Figure 12. Disk utilization overview for Test 4.
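The link between queue depth and waiting time can be estimated with Little's Law (average queue depth = throughput x average latency). This is a general queueing result, not something Platform Profiler computes for you, and the numbers below are hypothetical rather than values read from Figures 9-12.

```python
# Little's Law sketch: queue_depth = iops * latency_seconds.
# All input numbers are hypothetical, chosen only for illustration.

def average_latency_ms(queue_depth, iops):
    """Estimate the average time (ms) an I/O request spends in the system."""
    return queue_depth * 1000.0 / iops


# A modest request rate with a deep queue implies long waits per request.
print(average_latency_ms(queue_depth=8, iops=400))

# The same request rate with a shallow queue implies short waits.
print(average_latency_ms(queue_depth=1, iops=400))
```

Under this estimate, a deep queue at a modest request rate points to per-request latency, rather than bandwidth, as the limiting factor, which is consistent with the observation that few bytes are transferred while operations wait.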

Summary

Using Platform Profiler, I was able to understand the execution behavior of the movie recommendation workload and observe how certain performance metrics change across a different number of threads and driver memory settings. Moreover, I was surprised to find out that a lot of disk write operations happen during the execution of the workload, since Spark applications are designed to run in memory. In order to investigate the code further, I will proceed with running VTune Amplifier's Disk I/O analysis to find out the details behind the disk I/O performance.

Downpour Interactive* Is Bringing Military Sims to VR with Onward*

The original article is published by Intel Game Dev on VentureBeat*: Downpour Interactive is bringing military sims to VR with Onward. Get more game dev news and related topics from Intel on VentureBeat.

While there's no dearth of VR (virtual reality) shooters out there, military simulation games enthusiasts searching for a VR version of Arma* or Rainbow Six*, where tactics trump flashy firefights, don't have many options. Downpour Interactive* founder Dante Buckley was one such fan, and when he decided to make his first game, Onward*, after a stint in hardware development, he knew it had to be a military simulation.

Onward pits squads of MARSOC (Marine Corps Forces Special Operations Command) marines against their opponents, Volg, in tactical battles across cities and deserts. Like Counter-Strike* or Socom*, players are limited to one life, with no respawns. While players can heal each other, when they're dead, that's it. "It's a style I really loved growing up," Buckley says. "And I wanted to bring that to VR and make sure it doesn't get forgotten in this next generation of games."

Onward goes several steps further, however. There's no screen clutter, for one. The decision to eschew a HUD (head-up display) was an easy one for Buckley to make, and one that made sense for the game as both a VR title and a military simulation.

"You have to look to see who's on your team. There's no identification, no things hovering over their heads, so you have to get used to their voices and play-styles. You have to use the radio to communicate with your team and keep aware of what's going on. It just forces people to play differently than they're used to. So not having a HUD was a design choice made early on."

Playing Dead

Instead of using a mini-map or radar to identify enemies, players have to listen for hints that the enemy might be right around the corner or hiding just behind a wall. When an ally is shot, you can't just look at a health bar, so you need to check their pulse to see whether they're just knocked down — and thus can be healed and brought back into the fight — or dead.

"We wanted to encourage players to interact with each other like they would in the real world," Buckley explains, "rather than just checking their HUD."

Buckley wants the mixture of immersion and a lack of hand-holding to inspire more player creativity. He cites things like players lying on the ground and playing dead. Enemies ignore them and, when their backs are turned, the not-at-all-dead players get up and take them out. You've got to be a little paranoid when you see a body, then.

When Buckley started working on Onward, he was doing it all himself, but last year he was able to hire a team of four. The extra hands mean that a lot of the existing game is being improved upon, from the maps to the guns.

"We're still working on updating some things. We had to use asset stores to create these maps. When I first started, it was just me — I had to balance out programming and map design and sound design, so using asset stores like Unity* and other places was really helpful in fast-tracking the process and getting a vision set early on. But now that I have a team, we're going back and updating things and making them higher quality."

The addition of a weapon artist has been a particular boon, says Buckley. "When you could first play the game in August 2016, the weapons looked like crap. Now an awesome weapon artist has joined us and he's going back through the game updating each weapon. Things are looking really good now."

Beyond making them look good, Buckley also wants to ensure that each weapon handles realistically. He's used feedback from alpha testers, marines, and his own experiences from gun ranges to create the firearms.

Tools of the Trade

"There are different ergonomics for each weapon," he explains. And they've got different grips and reload styles. "That's something to consider that most non-VR games don't. With other games you just click ‘R' to reload. You never really need to think about how you hold it or reload it. That's something cool that we've translated from the real-world."

It's an extra layer of interaction that you can even see in the way that you use something like a pair of night vision goggles. Instead of just hitting a button, you have to actually grab the night vision goggles that are on your head and pull them down over your face. And when you're done with them, you need to lift the goggles up.

All of these tools and weapons are selected in the lobby, before a match. Players can pick their primary weapon, pistol, gear like grenades or syringes, and finally weapon attachments such as scopes, suppressors, and various foregrips. Everyone has access to the same equipment. There's a limit to how much you can add to your loadout (everyone gets 8 points to distribute as they see fit), but that's the only restriction.

"That's something I wanted to make sure was in the game," says Buckley. "I know a lot of games have progression systems, which are fun, but it can kill the skill-based aspect of some games, where the more you play, the more stuff you get, versus getting better."

The problem right now is that the haptics can't match Buckley's ambitions. Since the controllers aren't in the shape of weapons, and they only vibrate, it's hard to forget that you're just holding some weird-looking controllers. But there are people and companies creating peripherals that the controllers can be slotted into, calling to mind light gun shooters.

Onward has been on Steam* Early Access since August 2016, and Buckley considers it an overwhelmingly positive experience. "I can't say many negative things about it. It's been awesome. I'm super thankful because it gives me and my team an opportunity to show the world that we can make something big, and improve on it. Under any other circumstances, if there wasn't an avenue like this to make games and get them out into the world, a game like Onward might not have existed."

The early release has also allowed Buckley to watch a community grow around the game, which he thinks is helped by VR. "What makes us feel more together is that we can jump into VR and see each other, and see expressive behavior. You can see body language in-game; it's more than you get with a mic and a keyboard. Since people can emote how they're feeling, it's a little bit more real."

The end of this phase is almost in sight, however. Onward will launch by the end of the year, and Buckley promises some big announcements before then. In the meantime, you can play the early access version on Steam*.

CardLife*'s Liberating World is a Game Inside a Cardboard Creation Tool

The original article is published by Intel Game Dev on VentureBeat*: CardLife’s liberating world is a game inside a cardboard creation tool. Get more game dev news and related topics from Intel on VentureBeat.

With Robocraft*, its game of battling robots, Freejam* made its love for user-generated content known. With its latest game, the studio is going back to the ultimate source of user-generated content: the humble sheet of cardboard. Not so humble now, however, when it's used to craft an entire multiplayer survival game filled with mechs, dragons, and magic.

It started as a little prototype with a simple goal conceived by Freejam's CEO Mark Simmons: explore your creativity with cardboard. He and game director Rich Tyrer had chatted about it, thrown around more ideas, and they eventually tossed their creation out into the wild. "We had a basic cardboard world with a cardboard cabin and tools," recalls Tyrer. "We released it online just to see what would happen. The point was to see what people could do with their creativity with this cardboard and these two tools, a saw and a hacksaw."

Even with just a couple of tools, there were a lot of things that could be done with the cardboard — especially since the scale could be manipulated — and its potential was enough for a Freejam team to be set up a month later to properly develop it. "We went from there and molded it into this game. We started thinking about what genre would be good for the aesthetic, how could we let people make what they want? In our minds, the cardboard aesthetic was like being a child and building a castle out of a cardboard box. You fill the blanks. That's what it came down to."

The open-world multiplayer game itself, however, is not the end-point of the CardLife* concept. "The CardLife game is more of an example of what you can do in cardboard. We want to use that to create this cardboard platform where people can create things and do lots of modding. That's why it's UGC (user-generated content) focused and really customizable."

The Only Rule of CardLife

Freejam's goal is to create a platform where people can create whatever they want, both within the CardLife game and by creating mods simply by using Notepad* and Paint*, as long as those things are cardboard. Tyrer uses the example of Gears of War*. If a player wants to totally recreate Epic*'s shooter, they should just use Unreal*. If they want to make it out of cardboard though, then they can absolutely do that. That's the only creative limitation Tyrer wants to impose.

He stresses that, in terms of accessibility, he wants it to be easy enough for kids to use. "The 3D modeling for kids moniker is more about the barrier to entry and how difficult it is for someone to make a mod. We want it to be low, whether they want to make a driving version, a fighting game, or they just want to add more creatures." Everything in the game is essentially just a collection of 2D shapes that can be found as PNG files in the game folder. Making a new character model is as simple as drawing different shapes in Paint and putting them together.

CardLife's customization isn't limited to modding, however, and whenever players craft an item, a vehicle, or even a dragon, they're able to completely transform their cardboard creation. The system is named for and inspired by connecting the dots. It's a way for kids to create art by filling in the blanks, and that's very much the feel that CardLife is trying to capture by letting you alter the cardboard silhouette of a helicopter or a monster. It's what's ultimately happening when the world gets changed by players terraforming areas, too.

The less noticeable mechanisms and hidden pieces of card take care of themselves. "If you wanted to put big spikes on the back, you can drastically change this central piece of card on the back and make it their own," explains Tyrer. "But a small disc underneath the seat that nobody really sees, there's no point in someone drawing that. It just inherits the scale of all the other pieces that you've drawn. And you can see in the 3D preview how your pieces of card have had an effect."

Parts can be mirrored and duplicated as well, excising some of the busywork. If you're making a new character and give them a leg, you don't then have to make the second leg; the game will create a new leg for you. This, Tyrer hopes, will free people up to just focus on making cool things and exploring their creativity. He doesn't want the art and the gameplay to get in each other's way, so no matter how ridiculous your creation looks, it's still perfectly viable in combat.

Beautiful but Deadly

"We learned in Robocraft that art bots, as in bots that are aesthetically pleasing, generally don't perform well compared to very Robocraft-y bots, which is usually a block with loads of guns on top, because of the way the damage model works. So it's quite hard, for example, to make something that looks like SpongeBob SquarePants* be an effective robot. With CardLife, we wanted to make sure that people would always be able to creatively express themselves without having to worry about the gameplay implications."

PvE servers will be added in an update, but combat and PvP will remain an important part of CardLife. Tyrer envisions big player sieges, with large fortresses made out of cardboard being assaulted by Da Vinci-style helicopters, wizards, and soldiers carrying future-tech. Craftable items are split into technological eras, all the way up to the realms of science-fiction, but there's magic too, and craftable spells.

Tyrer sees cardboard as liberating, freeing him from genre and setting conventions. "If you're making an AAA sci-fi game, you can't put dragons in it. The parameters of what you can add to the game are set by the pre-existing notions of what sci-fi is. And you can see that in Robocraft. But with cardboard… if I'm a child playing with a cardboard box, nobody is going to pop up and tell me I can't have a dragon fight a mech. I can. That's the beauty of it."

Rather than standing around waiting for tech to get researched, discovering new recipes comes down to simply exploring and digging into the game. Sometimes literally. "It's more like real life," explains Tyrer. "As you find new materials and put them into your crafting forge, it will give you different options to make things. As you dig deeper into the ground and find rare ores, those ores will be associated with different recipes, and those recipes will then allow you to make stronger items."

To Infinity

Unlike its fellow creative sandbox Minecraft*, CardLife's world is not procedural. It is finite and designed in a specific way. If you're standing beneath a huge mountain in one server and go to another, you'll be able to find that very same mountain. It might look different, however, as these worlds are still dynamic and customizable. But they can also be replenished.

"If you want to keep a finite world running for an infinite amount of time, it needs to be replenished," says Tyrer. "Structures of lapsed players will decay, natural disasters will refill caves or reform mountains, and that's our way of making the world feel infinite. We can have a world that's shifting and changing but also one that people can get to know and love."

What's there right now, however, is just an early access placeholder. CardLife's moving along swiftly, though. A large update is due around the beginning of March, potentially earlier, that will introduce armor and weapon stats, building permissions so that you can choose who can hang out in or edit your structure, and item durability. And then another update is due out two months after that.

The studio isn't ready to announce a release date yet, and it's still busy watching its early access test bed and planning new features. More biomes are on the cards, as well as oceans that can be explored. "No avenue is closed," says Tyrer. And that includes platforms, so a console release isn't out of the question. There's even the cardboard connection between this and the Switch* via Nintendo*'s Labo construction kit, though Tyrer only laughs at the suggestion they'd be a good fit. It's not a 'no'.

"We just want to take all the cool things you can think of, put it in a pot, and mix it around."

CardLife is available at Freejam* now and is coming to Steam* soon.

The Path to Production IoT Development

Billions of devices are connecting to the Internet of Things (IoT) every year, and it isn’t happening magically. On the contrary, a veritable army of IoT developers is painstakingly envisioning and prototyping these Internet of Things “things”—one thing at a time. And, in many cases, they are guiding these IoT solutions from the drawing board to commercial production through an incredibly complex gauntlet.

Building a working prototype can be a challenging engineering feat, but it’s the decision-making all along the way that can be especially daunting. Remember that famous poem by Robert Frost, The Road Not Taken, in which the traveler comes to a fork in the road and dithers about which way to go? Just one choice? Big deal. Consider the possible divergent paths in IoT development:

- Developer kits – “out-of-the-box” sets of hardware and software resources to build prototypes and solutions.

- Frameworks – platforms that identify key components of an IoT solution, how they work together, and where essential capabilities need to reside.

- Sensors – solution components that detect or measure a physical property and record, indicate, or otherwise respond to it.

- Hardware – everything from the circuit boards, sensors, and actuators in an IoT solution to the mobile devices and servers that communicate with it.

- IDEs – Integrated Development Environments are software suites that consolidate the basic tools IoT developers need to write and test software.

- Cloud – encompasses all the shared resources—applications, storage and compute—that are accessible via the web to augment the performance, security and intelligence of IoT devices.

- Security – this can be multilayered, from the “thing” to the network to the cloud.

- Analytics – comprises all the logic on board the device, on the network and in the cloud that is required to turn an IoT solution’s raw data streams into decisions and action.

Given all the technologies to choose from that are currently on the market, the possible combinations are infinite. How well those combinations mesh is critical for an efficient development process. And efficiency matters now more than ever—especially in industries and areas of IoT development where the speed of innovation is off the charts.

One area of IoT development where the pace of innovation is quickly taking off is at the intersection of the Internet of Things and Artificial Intelligence (AI). It’s where developers are intent upon driving towards a connected and autonomous world. Whether connecting the unconnected, enabling smart devices, or eventually creating networked autonomous things on a large scale, developers are being influenced by ever-increasing compute performance and monitoring needs. They are responding by designing AI capabilities into highly integrated IoT solutions that are spanning the extended network environment: paving the way to solve highly complex challenges in areas of computer vision, edge computing, and automated manufacturing processes. The results are already making their presence known in enhanced retail customer experiences, beefed up security in cities, and better forecasting of events and human behaviors of all kinds.

For IoT developers, AI represents intriguing possibilities and much more: more tools, more knowledge, and more decision-making. Also, it adds complexity and can slow time to market. But the silver lining is that you don’t have to figure out AI or any aspect of IoT development all by yourself.

Intel stands ready to help with technology, expertise, and support, from scalable developer kits to specialized, industry-focused, use-case-driven kits and more. In fact, Intel offers more than 6,000 IoT solutions, from components and software to systems and services, all optimized to work together to sustain developers as their solutions evolve from prototype to production.

We take a practical, prescriptive approach to IoT development—helping you obtain the precise tools and training you need to realize your IoT ambitions. And we can assist you in fulfilling the specialized requirements of your IoT solution design today, while anticipating future requirements so you don’t have to.

Take your first step on the path to straightforward IoT development at https://software.intel.com/iot.

Battery-Powered Deep Learning Inference Engine

Improving visual perception of edge devices

LiPo batteries (lithium polymer batteries) and embedded processors are a boon to the Internet of Things (IoT) market. They have enabled IoT device manufacturers to pack more features and functionalities into mobile edge devices, while still providing a long runtime on a single charge. Advances in sensor technology, especially vision-based sensors, and in the software algorithms that process the large amounts of data these sensors generate have increased the need for better computational performance without compromising the battery life or real-time performance of these mobile edge devices.

The Intel® Movidius™ Visual Processing Unit (Intel® Movidius™ VPU) provides real-time visual computing capabilities to battery-powered consumer and industrial edge devices such as Google Clips, DJI® Spark drone, Motorola® 360 camera, HuaRay® industrial smart cameras, and many more. In this article, we won’t replicate any of these products, but we will build a simple handheld device that uses deep neural networks (DNN) to recognize objects in real-time.

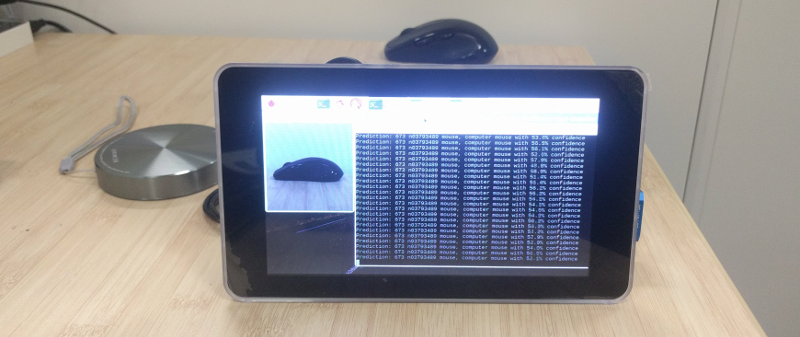

|

|---|

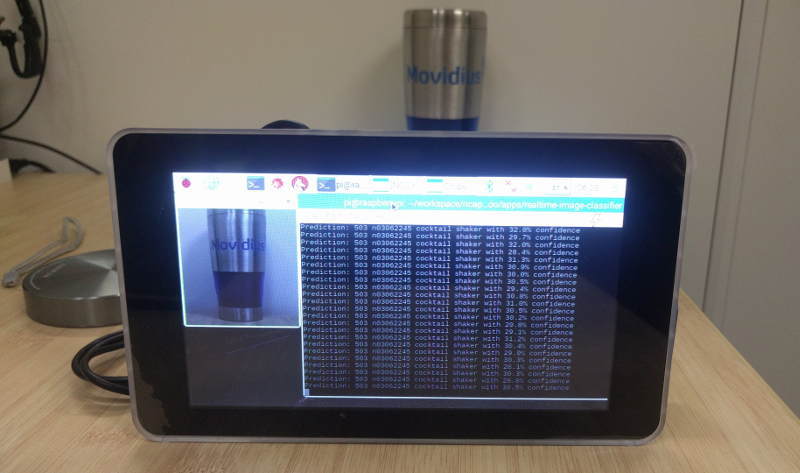

| The project in action |

Practical learning!

You will build…

A battery-powered DIY handheld device, with a camera and a touch screen, that can recognize an object when pointed toward it.

You will learn…

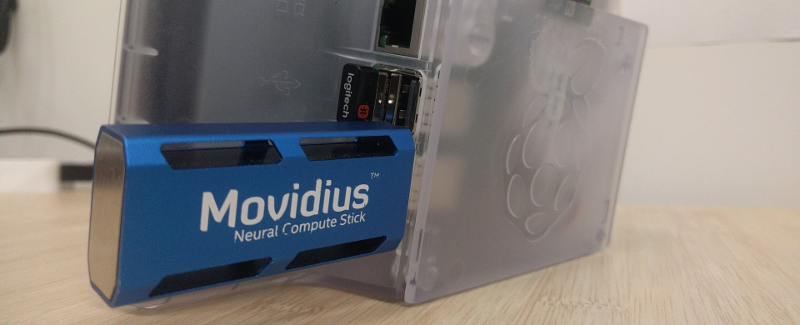

- How to create a live image classifier using Raspberry Pi* (RPi) and the Intel® Movidius™ Neural Compute Stick (Intel® Movidius™ NCS)

You will need…

- An Intel Movidius Neural Compute Stick - Where to buy

- A Raspberry Pi 3 Model B running the latest Raspbian* OS

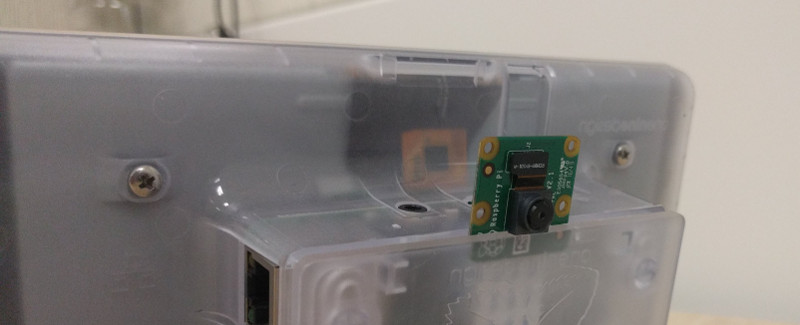

- A Raspberry Pi camera module

- A Raspberry Pi touch display

- A Raspberry Pi touch display case [Optional]

- Alternative option - Pimoroni® case on Adafruit

If you haven’t already done so, install the Intel Movidius NCSDK on your RPi either in full SDK or API-only mode. Refer to the Intel Movidius NCS Quick Start Guide for full SDK installation instructions, or Run NCS Apps on RPi for API-only.

Fast track…

If you would like to see the final output before diving into the detailed steps, download the code from our sample code repository and run it.

mkdir -p ~/workspace
cd ~/workspace
git clone https://github.com/movidius/ncappzoo
cd ncappzoo/apps/live-image-classifier
make run

The above commands must be run on a system that runs the full SDK, not just the API framework. Also make sure a UVC camera is connected to the system (a built-in webcam on a laptop will work).

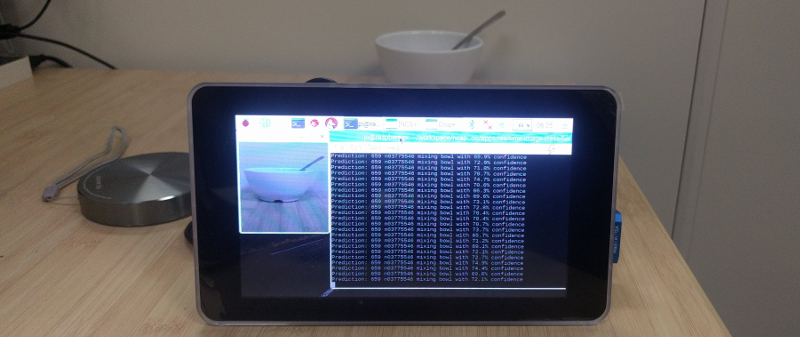

You should see a live video stream with a square overlay. Place an object in front of the camera and align it to be inside the square. Here’s a screenshot of the program running on my system.

Let’s build the hardware

Here is a picture of how the hardware setup turned out:

Step 1: Display setup

Touch screen setup: follow the instructions on element14’s community page.

Rotate the display: Depending on the display case or stand, your display might appear inverted. If so, follow these instructions to rotate the display 180°.

sudo nano /boot/config.txt
# Add the below line to /boot/config.txt and hit Ctrl-x to save and exit.
lcd_rotate=2
sudo reboot

Skip step 2 if you are using a USB camera.

Step 2: Camera setup

Enable CSI camera module: follow instructions on the official Raspberry Pi documentation site.

Enable v4l2 driver: For reasons unknown, Raspbian does not load V4L2 drivers for CSI camera modules by default. The example script for this project uses OpenCV-Python, which in turn uses V4L2 to access cameras (via /dev/video0), so we will have to load the V4L2 driver.

sudo nano /etc/modules
# Add the below line to /etc/modules and hit Ctrl-x to save and exit.
bcm2835-v4l2
sudo reboot

Let’s code

I am a big advocate of code reuse, so most of the Python* script for this project has been pulled from the previous article, ‘Build an image classifier in 5 steps’. The main difference is that each ‘step’ (a section of the script) has been moved into its own function.

The application is written in such a way that you can run any classifier neural network without having to make much change to the script. The following are a few user-configurable parameters:

- GRAPH_PATH: Location of the graph file against which we want to run the inference

  - By default it is set to ~/workspace/ncappzoo/tensorflow/mobilenets/graph

- CATEGORIES_PATH: Location of the text file that lists the labels of each class

  - By default it is set to ~/workspace/ncappzoo/tensorflow/mobilenets/categories.txt

- IMAGE_DIM: Dimensions of the image as defined by the chosen neural network

  - e.g., MobileNets and GoogLeNet use 224x224 pixels; AlexNet uses 227x227 pixels

- IMAGE_STDDEV: Standard deviation (scaling value) as defined by the chosen neural network

  - e.g., GoogLeNet uses no scaling factor; MobileNet uses 127.5 (stddev = 1/127.5)

- IMAGE_MEAN: Mean subtraction is a common technique used in deep learning to center the data

  - For the ILSVRC dataset, the mean is Blue = 102, Green = 117, Red = 123
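As a rough illustration, the parameters above might be set for MobileNets as follows. The paths, image dimensions, and scaling value come from the list above; the per-channel mean of 127.5 is an assumption made for this sketch (the list only quotes the ILSVRC means), chosen because, combined with the 1/127.5 scaling, it maps 8-bit pixel values into the range [-1, 1].

```python
import os

# Default locations quoted above; "~" is expanded to the user's home directory.
GRAPH_PATH = os.path.expanduser(
    "~/workspace/ncappzoo/tensorflow/mobilenets/graph")
CATEGORIES_PATH = os.path.expanduser(
    "~/workspace/ncappzoo/tensorflow/mobilenets/categories.txt")

# MobileNets expects 224x224 input images.
IMAGE_DIM = (224, 224)

# Scaling value for MobileNet as quoted above (stddev = 1/127.5).
IMAGE_STDDEV = 1.0 / 127.5

# ASSUMPTION: per-channel mean of 127.5, not stated in the list above.
IMAGE_MEAN = [127.5, 127.5, 127.5]

# With these values, the darkest and brightest 8-bit pixels map to about -1 and +1.
for pixel in (0, 255):
    print(pixel, "->", (pixel - IMAGE_MEAN[0]) * IMAGE_STDDEV)
```

Switching to a different classifier should then only require changing these five values.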

Before using the NCSDK API framework, we have to import the mvncapi module from the mvnc library:

import mvnc.mvncapi as mvnc

If you have already gone through the image classifier blog, skip steps 1, 2, and 5.

Step 1: Open the enumerated device

Just like any other USB device, when you plug the NCS into your application processor’s (Ubuntu laptop/desktop) USB port, it enumerates itself as a USB device. We will call an API to look for the enumerated NCS device, and another to open the enumerated device.

# ---- Step 1: Open the enumerated device and get a handle to it -------------

def open_ncs_device():

    # Look for enumerated NCS device(s); quit program if none found.
    devices = mvnc.EnumerateDevices()
    if len( devices ) == 0:
        print( 'No devices found' )
        quit()

    # Get a handle to the first enumerated device and open it.
    device = mvnc.Device( devices[0] )
    device.OpenDevice()

    return device

Step 2: Load a graph file onto the NCS

To keep this project simple, we will use a pre-compiled graph of a pre-trained GoogLeNet model, which was downloaded and compiled when you ran make inside the ncappzoo folder. We will learn how to compile a pre-trained network in another blog, but for now let’s figure out how to load the graph into the NCS.

# ---- Step 2: Load a graph file onto the NCS device -------------------------

def load_graph( device ):

    # Read the graph file into a buffer.
    with open( GRAPH_PATH, mode='rb' ) as f:
        blob = f.read()

    # Load the graph buffer into the NCS.
    graph = device.AllocateGraph( blob )

    return graph

Step 3: Pre-process frames from the camera

As explained in the image classifier article, a classifier neural network assumes there is only one object in the entire image. This is hard to control with a LIVE camera feed, unless you clear out your desk and stage a plain background. In order to deal with this problem, we will cheat a little bit. We will use OpenCV API to draw a virtual box on the screen and ask the user to manually align the object within this box; we will then crop the box and send the image to NCS for classification.
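The crop geometry can be tried out without a camera. The following sketch uses a synthetic 640x480 frame in place of a live capture (the frame size and the helper name crop_box are assumptions for illustration) and computes a centered box covering the middle third of the width and the middle half of the height.

```python
import numpy


def crop_box(width, height):
    """Return (x1, y1, x2, y2) of a centered alignment box."""
    x1 = int(width / 3)
    y1 = int(height / 4)
    x2 = int(width * 2 / 3)
    y2 = int(height * 3 / 4)
    return x1, y1, x2, y2


# Synthetic black BGR frame standing in for a camera frame (rows, cols, channels).
frame = numpy.zeros((480, 640, 3), dtype=numpy.uint8)
height, width, channels = frame.shape

x1, y1, x2, y2 = crop_box(width, height)

# NumPy images are indexed [row, column], i.e. [y, x].
cropped_frame = frame[y1:y2, x1:x2]

print("box corners:", (x1, y1), (x2, y2))
print("cropped shape:", cropped_frame.shape)
```

Note that the slice is written rows first, so the y range comes before the x range even though the box corners are given as (x, y).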

# ---- Step 3: Pre-process the images ----------------------------------------

def pre_process_image():

    # Grab a frame from the camera.
    ret, frame = cam.read()
    height, width, channels = frame.shape

    # Extract/crop frame and resize it.
    x1 = int( width / 3 )
    y1 = int( height / 4 )
    x2 = int( width * 2 / 3 )
    y2 = int( height * 3 / 4 )

    cv2.rectangle( frame, ( x1, y1 ) , ( x2, y2 ), ( 0, 255, 0 ), 2 )
    cv2.imshow( 'NCS real-time inference', frame )

    cropped_frame = frame[ y1 : y2, x1 : x2 ]
    cv2.imshow( 'Cropped frame', cropped_frame )

    # Resize image [image size is defined by chosen network during training].
    cropped_frame = cv2.resize( cropped_frame, IMAGE_DIM )

    # Mean subtraction and scaling [a common technique used to center the data].
    cropped_frame = cropped_frame.astype( numpy.float16 )
    cropped_frame = ( cropped_frame - IMAGE_MEAN ) * IMAGE_STDDEV

    return cropped_frame

Step 4: Offload an image/frame onto the NCS to perform inference

Thanks to the high performance and low power consumption of the Intel Movidius VPU, which is in the NCS, the only thing the Raspberry Pi has to do is pre-process the camera frames (step 3) and send them over to the NCS. The inference results are made available as an array of probability values, one for each class. We can use argmax() to determine the index of the top prediction and pull the label corresponding to that index.
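To see how argmax() picks the winning class, and how argsort() can rank the runners-up, here is a small stand-alone sketch. The labels and probabilities are made up for illustration; on the real device the array comes back from the NCS and the labels are read from the categories file.

```python
import numpy

# Hypothetical labels and probabilities, for illustration only.
categories = ["bowl", "mouse", "keyboard", "cup", "pen"]
output = numpy.array([0.05, 0.70, 0.10, 0.12, 0.03], dtype=numpy.float16)

# Index of the single most likely class.
top_prediction = output.argmax()
print("Top prediction:", top_prediction, categories[top_prediction])

# argsort() sorts ascending, so reverse it to rank from most to least likely.
order = output.argsort()[::-1]
for i in range(3):
    print("Prediction %d: %s with %3.1f%% confidence"
          % (i, categories[order[i]], 100.0 * output[order[i]]))
```

The array index is the only link between a probability and its label, so the categories file must list the classes in the same order the network was trained with.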

# ---- Step 4: Offload images, read and print inference results ----------------

def infer_image( graph, img ):

    # Read all categories into a list.
    categories = [line.rstrip('\n') for line in
                  open( CATEGORIES_PATH ) if line != 'classes\n']

    # Load the image as a half-precision floating point array.
    graph.LoadTensor( img , 'user object' )

    # Get results from the NCS.
    output, userobj = graph.GetResult()

    # Find the index of highest confidence.
    top_prediction = output.argmax()

    # Print top prediction.
    print( "Prediction: " + str(top_prediction)
           + " " + categories[top_prediction]
           + " with %3.1f%% confidence" % (100.0 * output[top_prediction] ) )

    return

If you are interested in seeing the actual output from the NCS, head over to ncappzoo/apps/image-classifier.py and make this modification:

# ---- Step 4: Read and print inference results from the NCS -------------------

# Get the results from NCS.
output, userobj = graph.GetResult()

# Print output.
print( output )

...

# Print top prediction.
for i in range( 0, 4 ):
    print( "Prediction " + str( i ) + ": " + str( order[i] )
           + " with %3.1f%% confidence" % (100.0 * output[order[i]] ) )

...

When you run this modified script, it will print out the entire output array. Here’s what you will get when you run an inference against a network that has 37 classes. Notice that the size of the array is 37 and that the top prediction (73.8%) is at index 30 of the array (7.37792969e-01).

[ 0.00000000e+00 2.51293182e-04 0.00000000e+00 2.78234482e-04

0.00000000e+00 2.36272812e-04 1.89781189e-04 5.07831573e-04

6.40749931e-05 4.22477722e-04 0.00000000e+00 1.77288055e-03

2.31170654e-03 0.00000000e+00 8.55255127e-03 6.45518303e-05

2.56919861e-03 7.23266602e-03 0.00000000e+00 1.37573242e-01

7.32898712e-04 1.12414360e-04 1.29342079e-04 0.00000000e+00

0.00000000e+00 0.00000000e+00 6.94580078e-02 1.38878822e-04

7.23266602e-03 0.00000000e+00 7.37792969e-01 0.00000000e+00

7.14659691e-05 0.00000000e+00 2.22778320e-02 9.25064087e-05

0.00000000e+00]

Prediction 0: 30 with 73.8% confidence

Prediction 1: 19 with 13.8% confidence

Prediction 2: 26 with 6.9% confidence

Prediction 3: 34 with 2.2% confidence

Step 5: Unload the graph and close the device

In order to avoid memory leaks and/or segmentation faults, we should close any open files or resources and deallocate any used memory.
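One way to make sure cleanup still runs when the inference loop fails is to wrap the loop in try/finally. The sketch below illustrates the pattern only: FakeGraph and FakeDevice are hypothetical stand-ins so that it runs without an NCS attached, and only the method names DeallocateGraph and CloseDevice are taken from the script in this article.

```python
class FakeGraph:
    """Hypothetical stand-in for the graph handle."""

    def __init__(self, log):
        self.log = log

    def DeallocateGraph(self):
        self.log.append("graph deallocated")


class FakeDevice:
    """Hypothetical stand-in for the device handle."""

    def __init__(self, log):
        self.log = log

    def CloseDevice(self):
        self.log.append("device closed")


def run(device, graph, work):
    """Run work() and always release the graph before closing the device."""
    try:
        work()
    finally:
        graph.DeallocateGraph()
        device.CloseDevice()


def failing_work():
    raise RuntimeError("simulated failure in the inference loop")


log = []
try:
    run(FakeDevice(log), FakeGraph(log), failing_work)
except RuntimeError:
    pass  # The error still propagates, but only after cleanup has run.

print(log)
```

The graph is released before the device is closed, mirroring the order in which the script tears things down.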

# ---- Step 5: Unload the graph and close the device -------------------------

def close_ncs_device( device, graph ):

    cam.release()
    cv2.destroyAllWindows()
    graph.DeallocateGraph()
    device.CloseDevice()

    return

Congratulations! You just built a DNN-based live image classifier.

The following pictures are of this project in action:

NCS and a wireless keyboard dongle plugged directly to RPI.

RPi camera setup

Classifying a bowl

Classifying a computer mouse

Further experiments

- Port this project onto a headless system like RPi Zero* running Raspbian Lite*.

- This example script uses MobileNets to classify images. Try flipping the camera around and modifying the script to classify your age and gender.

- Hint: Use graph files from ncappzoo/caffe/AgeNet and ncappzoo/caffe/GenderNet.

- Convert this example script to do object detection using ncappzoo/SSD_MobileNet or Tiny YOLO.

Further reading

- @wheatgrinder, an NCS community member, developed a system where live inferences are hosted on a local server, so you can stream it through a web browser.

- Depending on the number of peripherals connected to your system, you may notice throttling issues, as mentioned by @wheatgrinder in his post. Here’s a good read on how he fixed the issue.

Using and Understanding the Intel® Movidius™ Neural Compute SDK

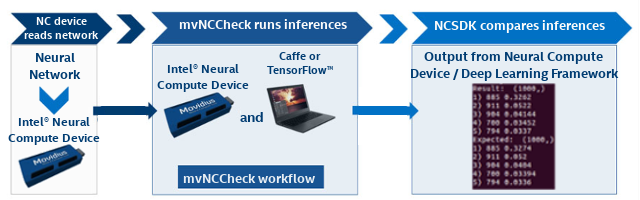

The Intel® Movidius™ Neural Compute Software Development Kit (NCSDK) comes with three tools that are designed to help users get up and running with their Intel® Movidius™ Neural Compute Stick: mvNCCheck, mvNCCompile, and mvNCProfile. In this article, we will aim to provide a better understanding of how the mvNCCheck tool works and how it fits into the overall workflow of the NCSDK.

Fast track: Let’s check a network using mvNCCheck!

You will learn…

- How to use the mvNCCheck tool

- How to interpret the output from mvNCCheck

You will need…

- An Intel Movidius Neural Compute Stick - Where to buy

- An x86_64 laptop/desktop running Ubuntu 16.04

If you haven’t already done so, install NCSDK on your development machine. Refer to the Intel Movidius NCS Quick Start Guide for installation instructions.

Checking a network

Step 1 - Open a terminal and navigate to ncsdk/examples/caffe/GoogLeNet

Step 2 - Let’s use mvNCCheck to validate the network on the Intel Movidius Neural Compute Stick

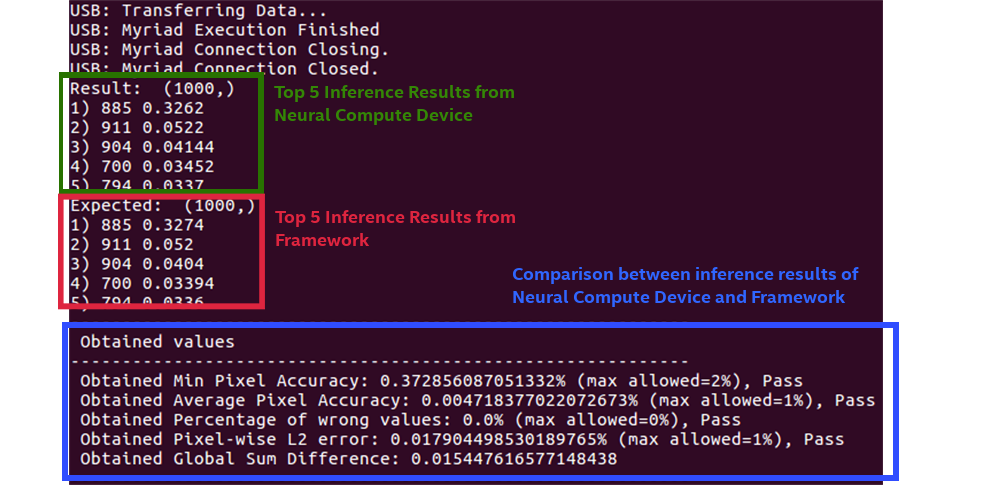

mvNCCheck deploy.prototxt -w bvlc_googlenet.caffemodel

Step 3 - You’re done! You should see output similar to the one below:

USB: Myriad Connection Closing.

USB: Myriad Connection Closed.

Result: (1000,)

1) 885 0.3015

2) 911 0.05157

3) 904 0.04227

4) 700 0.03424

5) 794 0.03265

Expected: (1000,)

1) 885 0.3015

2) 911 0.0518

3) 904 0.0417

4) 700 0.03415

5) 794 0.0325

------------------------------------------------------------

Obtained values

------------------------------------------------------------

Obtained Min Pixel Accuracy: 0.1923076924867928% (max allowed=2%), Pass

Obtained Average Pixel Accuracy: 0.004342026295489632% (max allowed=1%), Pass

Obtained Percentage of wrong values: 0.0% (max allowed=0%), Pass

Obtained Pixel-wise L2 error: 0.010001560141939479% (max allowed=1%), Pass

Obtained Global Sum Difference: 0.013091802597045898

------------------------------------------------------------

What does mvNCCheck do and why do we need it?

As part of the NCSDK, mvNCCheck serves three main purposes:

- Ensure accuracy when the data is converted from fp32 to fp16

- Quickly find out if a network is compatible with the Intel Movidius Neural Compute Stick

- Quickly debug the network layer by layer

Ensuring accurate results

To ensure accurate results, mvNCCheck compares inference results between the Intel Movidius Neural Compute Stick and the network’s native framework (Caffe* or TensorFlow*). Because the Intel Movidius Neural Compute Stick and the NCSDK use 16-bit floating point data, the NCSDK must convert the incoming 32-bit floating point data to 16-bit floats. The conversion from fp32 to fp16 can introduce minor rounding errors in the inference results, and this is where mvNCCheck comes in handy: it checks whether your network still produces accurate results.

First, the mvNCCheck tool reads in the network and converts the model to the Intel Movidius Neural Compute Stick format. It then runs an inference through the network on the Intel Movidius NCS, and it also runs an inference with the network’s native framework (Caffe or TensorFlow).

Finally, the mvNCCheck tool displays a brief report that compares the inference results from the Intel Movidius Neural Compute Stick with those from the native framework. These results can be used to confirm that a neural network is producing accurate results after the fp32 to fp16 conversion on the Intel Movidius Neural Compute Stick. Further details on the comparison results are discussed below.
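The rounding described above can be seen on individual values with a minimal sketch. It uses Python's standard struct module, whose "e" format code stores a 16-bit float; the helper name to_fp16 is made up for this illustration and is not part of the NCSDK. The sample numbers are the framework-side values from the report shown earlier, used here only as convenient inputs.

```python
import struct

def to_fp16(value):
    """Round a Python float to the nearest half-precision (fp16) value."""
    # Packing with the 'e' format stores a 16-bit float; unpacking widens it again.
    return struct.unpack("<e", struct.pack("<e", value))[0]

# Sample values taken from the "Expected" column of the report above.
expected = [0.3015, 0.0518, 0.0417, 0.03415, 0.0325]

for value in expected:
    rounded = to_fp16(value)
    rel_error = abs(rounded - value) / value
    print("%.5f -> %.8f (relative error %.6f%%)" % (value, rounded, 100.0 * rel_error))
```

For values in the normal fp16 range, a single rounding step changes a value by at most about 0.05 percent. In a real network the rounding happens at every layer, so the differences reported by mvNCCheck can be larger than what this one-step sketch shows.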

Determine network compatibility with Intel Movidius Neural Compute Stick

mvNCCheck can also be used simply to check whether a network is compatible with the Intel Movidius Neural Compute Stick. A number of limitations can make a network incompatible with the Intel Movidius Neural Compute Stick, including, but not limited to, memory constraints, unsupported layers, or unsupported neural network architectures. For more information on limitations, please visit the Intel Movidius Neural Compute Stick documentation website for TensorFlow and Caffe frameworks. Additionally, you can view the latest NCSDK Release Notes for more information on errata and new release features for the NCSDK.

Debugging networks with mvNCCheck

If your network isn’t working as expected, mvNCCheck can be used to debug your network. This can be done by running mvNCCheck with the -in and -on options.

- The -in option allows you to specify a node as the input node

- The -on option allows you to specify a node as the output node

Using the -in and -on arguments with mvNCCheck, it is possible to pinpoint the layer where the errors or discrepancies originate by comparing the Intel Movidius Neural Compute Stick results with the Caffe or TensorFlow results in a layer-by-layer or binary search analysis.

Debugging example:

Let’s assume your network architecture is as follows:

- Input - Data

- conv1 - Convolution Layer

- pooling1 - Pooling Layer

- conv2 - Convolution Layer

- pooling2 - Pooling Layer

- Softmax - Softmax

Let’s pretend you are getting nan (not a number) results when running mvNCCheck. You can use the -on option to check the output of the first Convolution layer “conv1” by running the following command: mvNCCheck user_network -w user_weights -in input -on conv1. With a large network, a binary search reduces the time needed to find the layer where the issue originates.
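The binary search mentioned above could be organized along these lines. This is only a sketch: first_bad_layer, check_command, and fake_check are hypothetical helpers, not NCSDK functions, and the search assumes that once one layer's output is wrong, the output of every later layer is wrong as well. The command line is built exactly as in the example above but is not executed here; a real check would run it and inspect the report.

```python
def first_bad_layer(layers, output_is_ok):
    """Return the first layer whose output fails the check, or None."""
    low, high = 0, len(layers)
    while low < high:
        mid = (low + high) // 2
        if output_is_ok(layers[mid]):
            low = mid + 1   # the problem starts after this layer
        else:
            high = mid      # the problem starts at or before this layer
    return layers[low] if low < len(layers) else None


def check_command(output_node, network="user_network", weights="user_weights"):
    """Build the mvNCCheck command line for one output node."""
    return ["mvNCCheck", network, "-w", weights, "-in", "input", "-on", output_node]


layers = ["conv1", "pooling1", "conv2", "pooling2", "Softmax"]

# Stand-in for a real check: pretend that nan values first appear in conv2.
def fake_check(layer):
    return layers.index(layer) < layers.index("conv2")

culprit = first_bad_layer(layers, fake_check)
print("First failing layer:", culprit)
print("Command to re-check it:", " ".join(check_command(culprit)))
```

With five layers the search needs at most three checks instead of five; the saving grows with the depth of the network.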

Understanding the output of mvNCCheck

Let’s examine the output of mvNCCheck above.

- The results in the green box (listed under Result) are the top five Intel Movidius Neural Compute Stick inference results

- The results in the red box (listed under Expected) are the top five framework results from either Caffe or TensorFlow

- The comparison output (shown in blue, under Obtained values) shows various comparisons between the two inference results

To understand these results in more detail, note that the output from the Intel Movidius Neural Compute Stick and the output from Caffe or TensorFlow are each stored in a tensor (put simply, a tensor is an array of values). Each of the five comparison tests is a mathematical comparison between the two tensors.

Legend:

- ACTUAL – the tensor output by the Intel Movidius Neural Compute Stick

- EXPECTED – the tensor output by the framework (Caffe or TensorFlow)

- Abs – Calculate the absolute value

- Max – Find the maximum value in a tensor (or tensors)

- Sqrt – Find the square root of a value

- Sum – Find the sum of a tensor’s values

Min Pixel Accuracy:

This value represents the largest difference between the two output tensors’ values.

Average Pixel Accuracy:

This is the average difference between the two tensors’ values.

Percentage of wrong values:

This value represents the percentage of Intel Movidius NCS tensor values that differ more than 2 percent from the framework tensor.

Why the 2% threshold? The 2 percent threshold comes from the expected impact of reducing the precision from fp32 to fp16.

Pixel-wise L2 error:

This value is a rough relative error of the entire output tensor.

Global Sum Difference:

The sum of all of the differences between the Intel Movidius NCS tensor and the framework tensor.
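The five comparisons can be sketched in plain Python as follows. This is one plausible reading of the descriptions above, not the tool's actual source: it assumes that every percentage is expressed relative to the largest absolute value in EXPECTED, and that the pixel-wise L2 error is the root-mean-square difference normalized the same way. The function name compare_tensors and the dictionary keys are made up for this illustration.

```python
import math

def compare_tensors(actual, expected, threshold_percent=2.0):
    """Compare two equal-length lists of floats, in percent of max |EXPECTED|."""
    diffs = [abs(a - e) for a, e in zip(actual, expected)]
    scale = max(abs(e) for e in expected) / 100.0  # 1% of the largest expected value
    wrong = sum(1 for d in diffs if d > threshold_percent * scale)
    return {
        "min_pixel_accuracy": max(diffs) / scale,
        "average_pixel_accuracy": (sum(diffs) / len(diffs)) / scale,
        "percentage_wrong": 100.0 * wrong / len(diffs),
        "pixelwise_l2_error": math.sqrt(sum(d * d for d in diffs) / len(diffs)) / scale,
        "sum_difference": sum(diffs),
    }

# Top-five values from the sample report (Result vs. Expected).
actual = [0.3015, 0.05157, 0.04227, 0.03424, 0.03265]
expected = [0.3015, 0.0518, 0.0417, 0.03415, 0.0325]

for name, value in compare_tensors(actual, expected).items():
    print("%s: %.6f" % (name, value))
```

Because only five of the 1,000 output values are used here, the numbers will not reproduce the report exactly. Under this reading, though, the largest top-five difference (0.00057 relative to 0.3015, about 0.19 percent) is close to the reported Min Pixel Accuracy, and dividing the reported Global Sum Difference by 1,000 values and by 0.3015 gives roughly the reported Average Pixel Accuracy.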

How did mvNCCheck run an inference without an input?

When making a forward pass through a neural network, it is common to supply a tensor or array of numerical values as input. If no input is specified, mvNCCheck uses an input tensor of random float values ranging from -1 to 1. It is also possible to specify an image input with mvNCCheck by using the “-i” argument followed by the path of the image file.
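As a rough illustration of such a default input, the sketch below fills a tensor with random floats between -1 and 1. The uniform distribution, the helper name random_input, and the 3 x 224 x 224 size are assumptions made for this example; the text above only states the value range.

```python
import random

def random_input(num_values, seed=None):
    """Return num_values floats drawn uniformly from [-1.0, 1.0]."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(num_values)]

# 3 x 224 x 224 is used here only as an example input size.
tensor = random_input(3 * 224 * 224, seed=0)
print(len(tensor), min(tensor), max(tensor))
```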

Examining a Failed Case

If you run mvNCCheck and your network fails, it may be for one of the following reasons.

Input Scaling

Some neural networks expect the input values to be scaled. If the inputs are not scaled, this can result in the Intel Movidius Neural Compute Stick inference results differing from the framework inference results.

When using mvNCCheck, you can use the -S option to specify the divisor used to scale the input. Images are commonly stored with the values of each color channel in the range 0-255. If a neural network expects values from 0.0 to 1.0, the -S 255 option divides all input values by 255 and so scales the inputs to the range 0.0 to 1.0.

The -M option can be used to subtract the mean from the input. For example, if a neural network expects input values ranging from -1 to 1, you can use the -S 128 and -M 128 options together to scale the inputs to the range -1 to 1.
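The arithmetic behind these two options can be checked with a small sketch. The function preprocess is hypothetical, and the order of operations (subtract the mean first, then divide by the scale) is an assumption chosen because it reproduces the -1 to 1 range given in the example above.

```python
def preprocess(pixels, mean=0.0, scale=1.0):
    """Subtract the mean, then divide by the scale (assumed order)."""
    return [(p - mean) / scale for p in pixels]

raw = [0, 64, 128, 255]

# Equivalent of -S 255: maps 0..255 to 0.0..1.0.
print(preprocess(raw, scale=255.0))

# Equivalent of -M 128 together with -S 128: maps 0..255 to roughly -1.0..1.0.
print(preprocess(raw, mean=128.0, scale=128.0))
```

With a mean and scale of 128, a pixel value of 0 maps to -1.0 and a value of 255 maps to 0.9921875, just under 1.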

Unsupported layers

Not all neural network architectures and layers are supported by the Intel Movidius Neural Compute Stick. If you receive an error message saying “Stage Details Not Supported” after running mvNCCheck, the network you have chosen may require operations or layers that are not yet supported by the NCSDK. For a list of all supported layers, please visit the Neural Compute Caffe Support and Neural Compute TensorFlow Support documentation sites.

Bugs

Another possible cause of incorrect results is a bug in the NCSDK. Please report all bugs to the Intel Movidius Neural Compute Developer Forum.

More mvNCCheck options

For a complete listing of the mvNCCheck arguments, please visit the mvNCCheck documentation website.

Further reading

- Understand the entire development workflow for the Intel Movidius Neural Compute Stick.

- Here’s a good write-up on network configuration, which includes mean subtraction and scaling topics.

Why Survival Sim Frostpunk* is Eerily Relevant

The original article is published by Intel Game Dev on VentureBeat*: Why survival sim Frostpunk is eerily relevant. Get more game dev news and related topics from Intel on VentureBeat.

Six days. That's how long I survived Frostpunk*'s harsh world until the people overthrew me.

Eliminating homelessness and mining coal for our massive heat-emitting generator only got me so far — my citizens were overworked, sick, and out of food. The early press demo I tried was a short but acute example of the challenges Frostpunk has in store when it comes to PC on April 24. It's set in an alternate timeline where, in the late 19th century, the world undergoes a new ice age, and you're in charge of the last city on earth.

As the city ruler, you have to make difficult choices. For example, should you legalize child labor to beef up your manpower, or force people to work 24-hour shifts to keep the city running? These and other questions add a dark twist to the city simulation genre, and Frostpunk creator 11 Bit Studios* certainly knows how to craft anxiety-inducing decisions.

In 2014, the Polish developer released This War of Mine*, which was about helping a small group of people survive the aftermath of a brutal war. It was the company's first attempt at making a game about testing a player's morality. Frostpunk builds on those moral quandaries by dramatically increasing the stakes.

It gives you the unenviable task of keeping hundreds of people alive, a number that'll only grow as your city becomes more civilized.

"It's all about your choices: You can be a good leader or you can be a bad leader. But it's not as black and white as you'd think because if you're a good leader and you listen to your people, the people may not always be right. So this is why we thought this could be a really thrilling idea for a game. It shows how morality can be grey rather than black and white," said partnerships manager Pawel Miechowski.